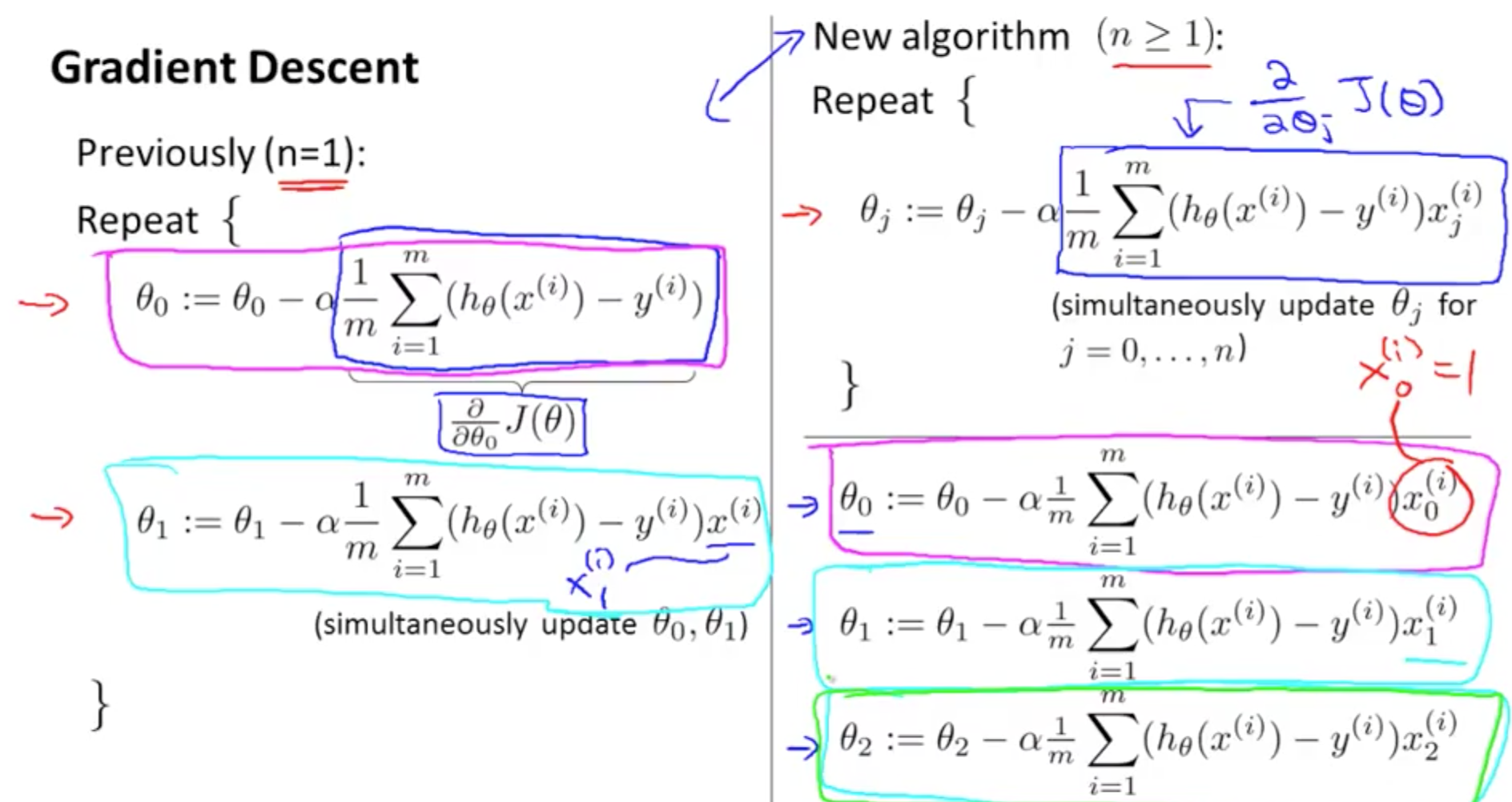

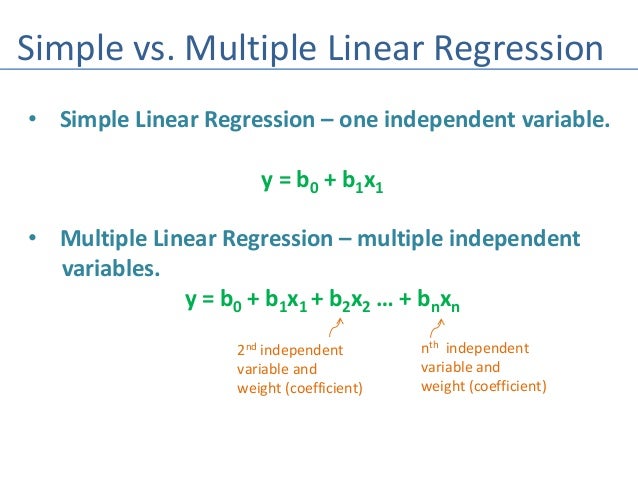

Why is a t-test used in the linear regression model?

The linear regression model is used to predict the value of a continuous response variable based on the value of another continuous (predictor) variable. In most cases, linear regression is an excellent tool for prediction. In some instances, however, a linear relationship may not be an appropriate description of the data. The t-test statistic helps to determine whether the data provide evidence of a linear relationship at all, that is, whether the fitted slope differs significantly from zero.

In a simple linear regression model such as $Y = mX + b$, the t-test statistic is used to test the following hypotheses:

$$H_0: m = 0 \qquad H_1: m \neq 0$$

A slope (coefficient of the predictor variable) of $m = 0$ represents the hypothesis that there is no linear relationship between the predictor variable and the response variable. If the null hypothesis is true, the regression line is parallel to the X-axis, where the Y-axis represents the response variable and the X-axis represents the predictor variable. The diagram of the null hypothesis shows such a horizontal line. In multiple linear regression, for example $Y = aX + bZ$, the same test is applied to each coefficient separately.

The test is therefore a one-sample t-test on the slope: it compares the estimated coefficient with a hypothesized value (zero), assuming the relationship is modeled as a straight line. The formula for the t-test statistic in linear regression is:

$$t = \frac{m - m_0}{SE}$$

where $m$ is the slope (coefficient) obtained using the least-squares method, $m_0$ is the hypothesized value of the slope ($m_0 = 0$ under $H_0$), and $SE$ is the standard error of the slope estimate. For simple linear regression it can be estimated as:

$$SE = \frac{S}{\sqrt{\sum_{i=1}^{N} (x_i - \bar{x})^2}}, \qquad S = \sqrt{\frac{\sum_{i=1}^{N} (y_i - \hat{y}_i)^2}{N - 2}}$$

where $S$ is the standard deviation of the residuals, $\hat{y}_i$ is the fitted value for the $i$-th data point, and $N$ is the total number of data points. Under $H_0$, $t$ follows a t-distribution with $N - 2$ degrees of freedom, so a large $|t|$ is evidence against $H_0$.
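A minimal sketch of this test in plain Python follows. It assumes paired lists of numbers and uses only the standard library. The function name `slope_t_test` and the sample data are hypothetical and serve only as an illustration. The function returns the statistic and its degrees of freedom rather than a p-value, which you would read from a t table (or a statistics library) for $N - 2$ degrees of freedom.

```python
from math import sqrt


def slope_t_test(xs, ys, m0=0.0):
    """Least-squares fit of y = m*x + b and the t statistic for H0: m = m0.

    Returns (m, b, se, t, df) where se is the standard error of the slope
    and df = N - 2 is the degrees of freedom of the t-distribution.
    """
    n = len(xs)
    if n != len(ys) or n < 3:
        raise ValueError("need at least 3 paired observations")
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    sxx = sum((x - x_bar) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("predictor values must not all be equal")
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    m = sxy / sxx                 # least-squares slope
    b = y_bar - m * x_bar         # least-squares intercept
    # S: residual standard deviation with N - 2 degrees of freedom
    sse = sum((y - (m * x + b)) ** 2 for x, y in zip(xs, ys))
    s = sqrt(sse / (n - 2))
    se = s / sqrt(sxx)            # standard error of the slope
    # If se is exactly 0 (a perfect fit), this raises ZeroDivisionError
    t = (m - m0) / se
    return m, b, se, t, n - 2


# Hypothetical example data
m, b, se, t, df = slope_t_test([1, 2, 3, 4], [1, 3, 2, 4])
print(f"m={m:.3f} b={b:.3f} SE={se:.4f} t={t:.3f} df={df}")
```

For these four points the slope is 0.8 and $t \approx 1.89$ with 2 degrees of freedom. That is too small to reject $H_0$ at conventional significance levels with so few data points.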

Categorical variables, such as religion, major field of study or region of residence, need to be recoded to binary (dummy) variables or other types of contrast variables.
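One way to do this recoding is sketched below in plain Python. The helper `dummy_code` is hypothetical. It uses treatment (reference-category) coding, one of several possible contrast schemes, and drops one level so that the dummy columns are not perfectly collinear with the intercept.

```python
def dummy_code(values, reference=None):
    """Treatment (reference-category) coding of a categorical variable.

    Returns a dict mapping each non-reference level to a list of 0/1
    indicators. The reference level gets no column, which avoids perfect
    collinearity with the regression intercept.
    """
    levels = sorted(set(values))
    if reference is None:
        reference = levels[0]      # default: first level alphabetically
    if reference not in levels:
        raise ValueError(f"unknown reference level: {reference!r}")
    return {
        level: [1 if v == level else 0 for v in values]
        for level in levels
        if level != reference
    }


# Hypothetical example: region of residence for four respondents
regions = ["north", "south", "east", "south"]
print(dummy_code(regions))
print(dummy_code(regions, reference="south"))
```

With the default reference level `"east"`, the result has a `north` column `[1, 0, 0, 0]` and a `south` column `[0, 1, 0, 1]`. Each regression coefficient on a dummy column is then interpreted relative to the reference category.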

Statistics to consider for a successful linear-regression analysis:

- For each variable: the number of valid cases, the mean and the standard deviation.
- For each model: regression coefficients, correlation matrix, part and partial correlations, multiple R, R², adjusted R², change in R², standard error of the estimate, analysis-of-variance table, predicted values and residuals.
- Also: 95% confidence intervals for each regression coefficient, variance-covariance matrix, variance inflation factor, tolerance, Durbin-Watson test, distance measures (Mahalanobis, Cook and leverage values), DfBeta, DfFit, prediction intervals and case-wise diagnostic information.
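Several of the model-level statistics listed above can be computed directly from the observed and fitted values. The sketch below assumes a model with `k` predictors plus an intercept whose fitted values are already available. The function `fit_summary` and its dictionary keys are hypothetical names used for illustration.

```python
def fit_summary(ys, fitted, k):
    """Model-level statistics for a linear model with k predictors plus an intercept."""
    n = len(ys)
    if n != len(fitted) or n <= k + 1:
        raise ValueError("need len(ys) == len(fitted) > k + 1")
    y_bar = sum(ys) / n
    residuals = [y - f for y, f in zip(ys, fitted)]
    sse = sum(e * e for e in residuals)          # residual sum of squares
    sst = sum((y - y_bar) ** 2 for y in ys)      # total sum of squares
    if sse == 0 or sst == 0:
        raise ValueError("degenerate fit: zero residual or total variation")
    r2 = 1 - sse / sst
    adj_r2 = 1 - (1 - r2) * (n - 1) / (n - k - 1)
    see = (sse / (n - k - 1)) ** 0.5             # standard error of the estimate
    # Durbin-Watson: squared successive residual differences over SSE;
    # values near 2 suggest no first-order autocorrelation in the residuals.
    dw = sum((residuals[i] - residuals[i - 1]) ** 2 for i in range(1, n)) / sse
    return {"r2": r2, "adj_r2": adj_r2, "see": see, "durbin_watson": dw}


# Hypothetical example: fitted values from a one-predictor least-squares fit
stats = fit_summary([1, 3, 2, 4], [1.3, 2.1, 2.9, 3.7], k=1)
print(stats)
```

For this small example the results are approximately R² = 0.64, adjusted R² = 0.46 and Durbin-Watson = 3.4. The adjusted R² penalizes the extra parameter, which matters when the number of data points is small.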
